Tag: Multilingual AI

-

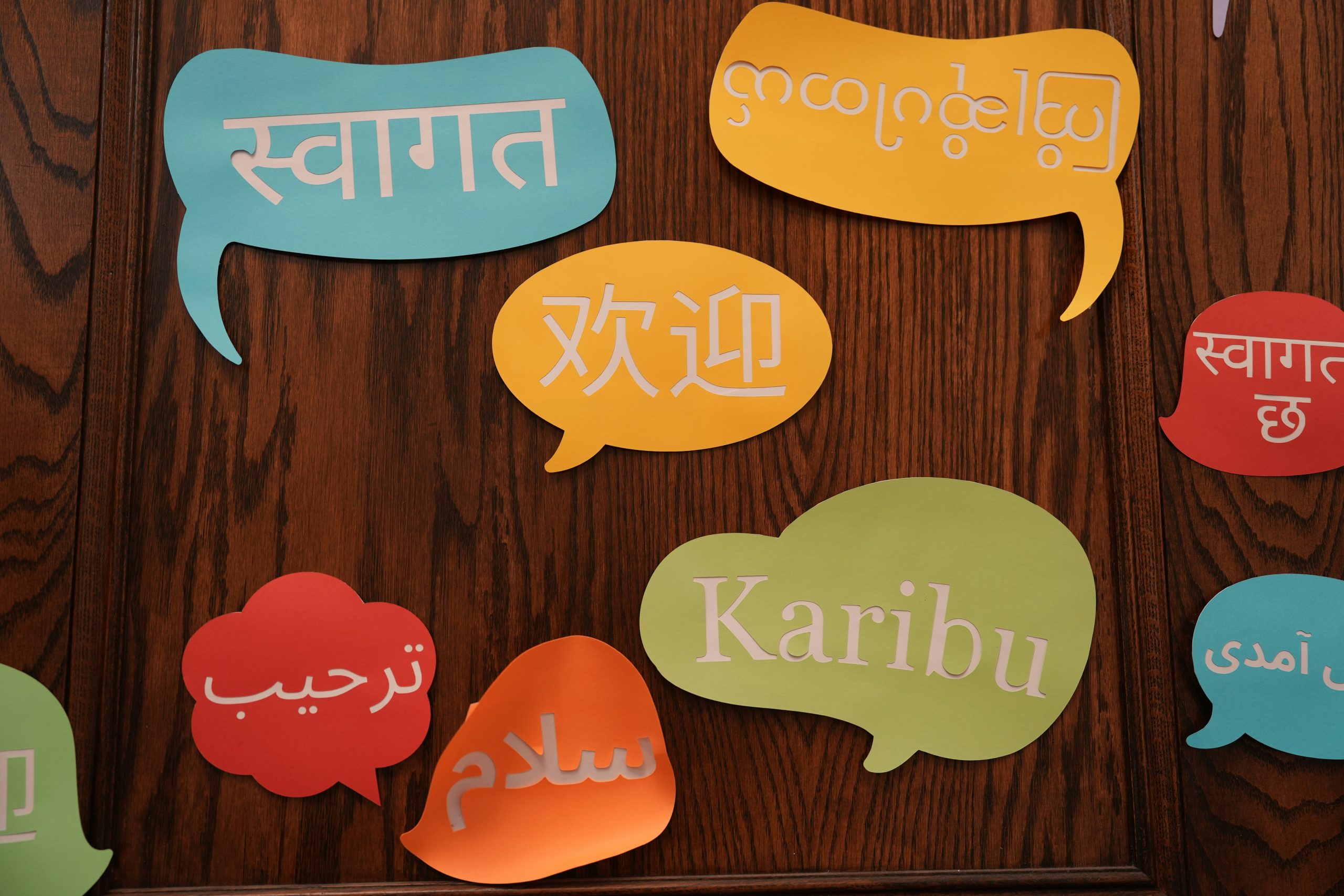

Most large AI models are trained primarily on English text, which can lead to bias against less-represented languages. The BLOOM project was created to change this by making AI more multilingual and transparent.

What Is BLOOM?

BLOOM is a Large Language Model (LLM) released in 2022 by the BigScience collaboration, a group of over

-

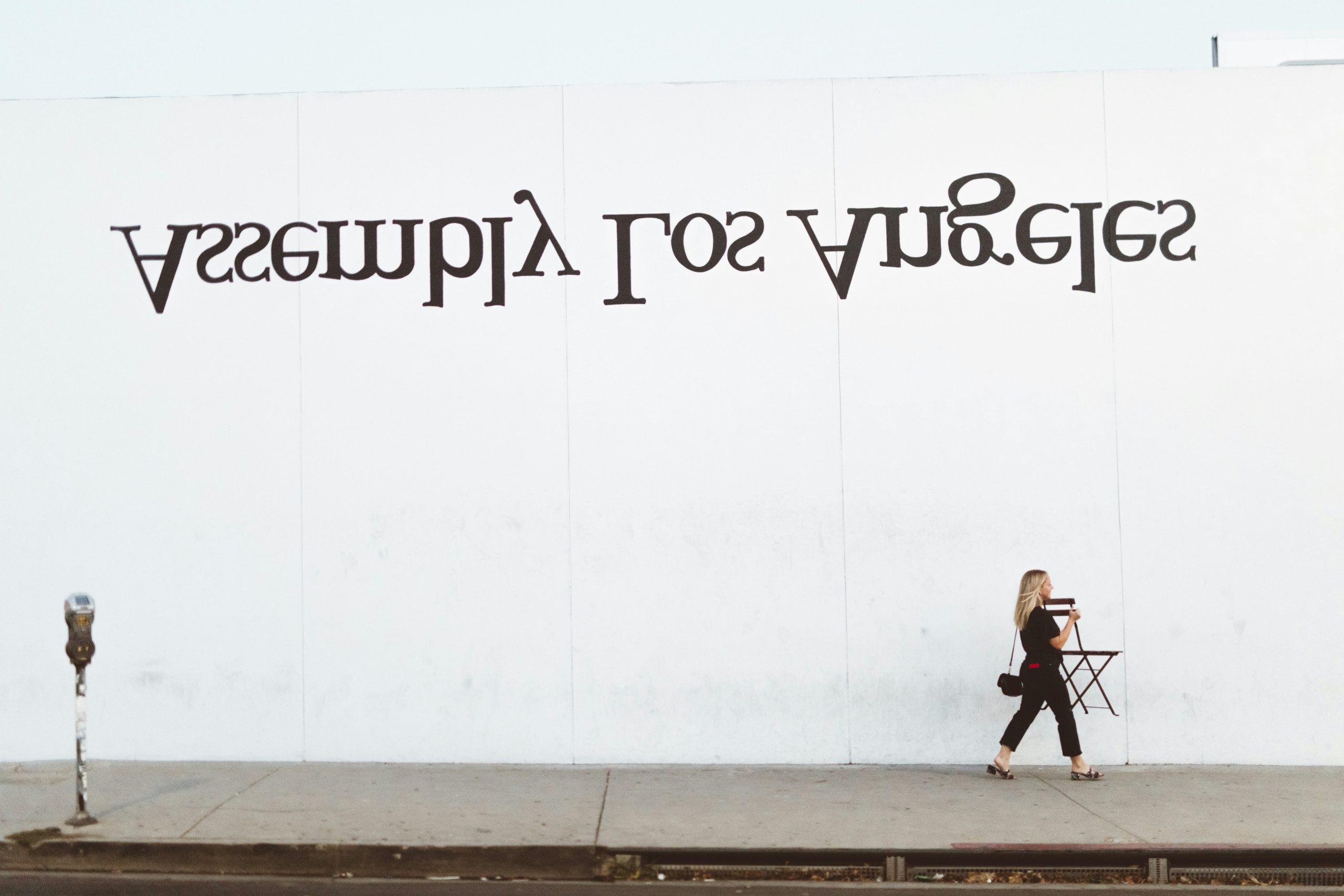

AI-powered translation tools like DeepL and Google Translate have revolutionized how we communicate across languages. But they’re not flawless. By looking at common translation errors, we can learn not only where AI struggles, but also how languages differ in structure, meaning, and culture.

Literal Translations

One of the most frequent mistakes is literal translation, where AI

-

AI systems are often presented as neutral, but they are only as fair as the data they’re trained on. In multilingual contexts, bias becomes especially visible, affecting gender representation, dialect recognition, and cultural sensitivity. Understanding these biases is essential for building and using AI responsibly.

Gender Bias in AI

Research shows that AI models can reinforce

-

AI has shown remarkable capabilities in language understanding and generation, but it also has notable weaknesses. Understanding where AI performs well, and where it doesn’t, is crucial for responsible and effective use.

Where AI Excels

AI systems, particularly large language models (LLMs), shine in:

These successes stem from large amounts of training data and extensive

-

Writing effective prompts in languages other than English, such as French, Spanish, or Arabic, takes care and precision. Multilingual prompt engineering helps your AI model understand intent, style, and context in diverse linguistic settings.

Why Language Matters in Prompting

Multilingual LLMs often perform better when prompts align with their dominant training languages. As research shows, prompts crafted in

-

In our globalized world, many people naturally mix languages in the same sentence, a phenomenon called code-switching. But can AI tools like ChatGPT, Claude, or Gemini handle these mixed-language prompts effectively? Let’s explore how they perform, where they struggle, and what this means for multilingual communication.

What Are Mixed-Language Prompts?

A mixed-language prompt is a query

-

AI models like GPT‑4 and Claude are powerful, but are they equally effective in English and French? Let’s explore their strengths, their differences, and what these mean in real multilingual use.

How AI Performs in English vs French

Studies show that GPT‑4 performs similarly in English and French on clinical tasks, with accuracy rates of 35.8% in English

-

Large Language Models (LLMs) like GPT‑4 and Claude are trained on vast amounts of text from the web, books, and other sources across many languages. This multilingual training lets them recognize patterns in different languages, which gives them multilingual and, in some cases, cross-lingual capabilities.

How LLMs Learn Language

LLMs learn by processing massive datasets and adjusting