Tag: Bias in AI

-

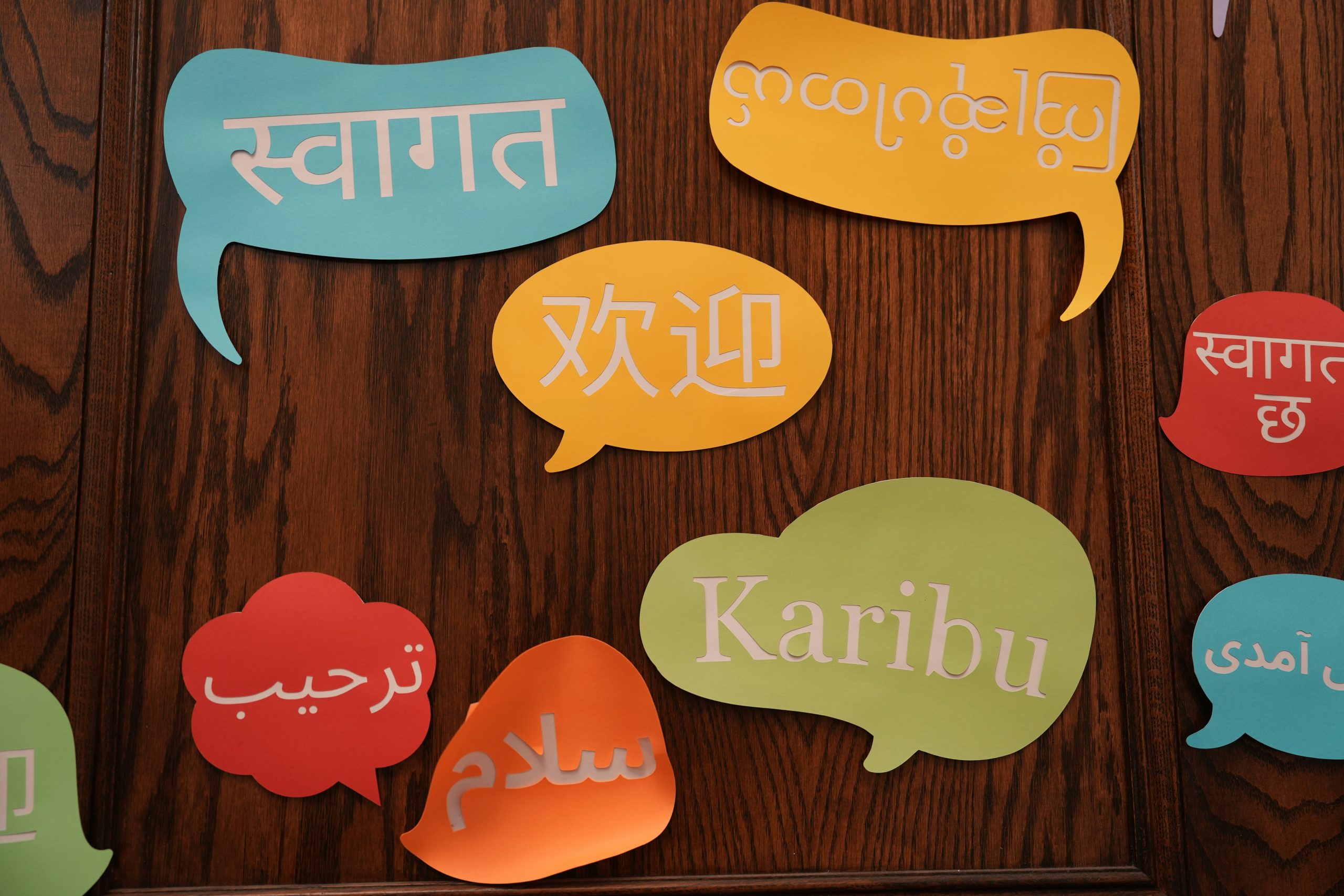

Many languages, such as French and Spanish, use gendered nouns. AI translation tools often default to masculine forms, reinforcing gender stereotypes. Google Translate has taken steps to address this.

The Problem

For years, typing “doctor” into Google Translate would return “le médecin” (masculine) in French, while “nurse” returned the feminine “infirmière”. This reflects patterns in

-

Most large AI models are trained primarily on English text, which can lead to bias against underrepresented languages. The BLOOM project was created to change this by making AI more multilingual and transparent.

What Is BLOOM?

BLOOM is a Large Language Model (LLM) released in 2022 by the BigScience collaboration, a group of over

-

Bias in AI is one of the biggest challenges in building fair, inclusive, and trustworthy systems. From reinforcing gender stereotypes to misrepresenting dialects or cultures, bias doesn’t just reduce accuracy; it affects real people. The good news is that there are strategies to reduce bias in AI and make its use more responsible.

Understand Where Bias

-

AI systems are often presented as neutral, but they are only as fair as the data they’re trained on. In multilingual contexts, bias becomes especially visible, affecting gender representation, dialect recognition, and cultural sensitivity. Understanding these biases is essential for building and using AI responsibly.

Gender Bias in AI

Research shows that AI models can reinforce

-

AI has shown remarkable capabilities in language understanding and generation, but it also has notable weaknesses. Understanding where AI performs well, and where it doesn’t, is crucial for responsible and effective use.

Where AI Excels

AI systems, particularly large language models (LLMs), shine in:

These successes stem from large amounts of training data and extensive